NVIDIA just hit $4.9 trillion in market cap for the first time this month 1. Meanwhile, OpenAI is burning through cash at an incredible pace—losing about $5 billion in 2024 on $3.7 billion in revenue—while still commanding a $157 billion valuation 2.

We’re so busy watching the AI hype cycle that we’re not asking the right question. It’s not whether NVIDIA’s chips are the best (they are, for now) or whether ChatGPT will keep growing users (maybe). The real question is simpler and way more important: where is the actual money going in this AI gold rush? Because right now, NVIDIA is pulling in $44.1 billion per quarter selling the picks and shovels 3, while the companies everyone thinks are “winning” AI are hemorrhaging cash faster than a startup with unlimited VC funding. Something doesn’t add up—and that’s exactly what we need to figure out.

The Great AI Profit Paradox

Here’s the number that should make every investor pause: AI companies received over $100 billion in venture capital funding in 2024, an increase of over 80% from $55.6 billion in 2023 4. That’s not a typo. We’re talking about more money flowing into AI startups in a single year than most countries’ entire GDP.

But here’s where it gets interesting. While 42% of U.S. venture capital was invested into AI companies in 2024, up from 36% in 2023 and 22% in 2022 5, the actual revenue story is completely different.

So let’s do some basic math here. The combined revenues of leading AI companies grew by over 9x in 2023-2024 6, but we’re still looking at maybe $5-6 billion in total revenue across all the big foundation model companies. Meanwhile, AI venture capital funding surged past $100 billion in 2024 4.

That’s a ratio of roughly 17:1 to 20:1 between investment and actual revenue. In any other industry, we’d call this what it is: a massive bubble where money is flowing to companies that have no clear path to profitability.
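As a rough check on that ratio, the arithmetic can be written out. This is a back-of-the-envelope sketch that uses only the figures quoted above; the variable names are illustrative.

```python
# Figures quoted in the text above (USD).
vc_funding_2024 = 100e9                # "over $100 billion" in AI venture funding
revenue_low, revenue_high = 5e9, 6e9   # "$5-6 billion" in foundation model revenue

# Investment dollars per dollar of revenue, at each end of the revenue range.
ratio_at_low_revenue = vc_funding_2024 / revenue_low
ratio_at_high_revenue = vc_funding_2024 / revenue_high

print(f"Investment-to-revenue ratio: "
      f"{ratio_at_high_revenue:.1f}:1 to {ratio_at_low_revenue:.1f}:1")
```

The 20:1 headline figure sits at the low end of the revenue estimate; at $6 billion in revenue the ratio is closer to 17:1.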

But here’s the thing everyone’s missing while they’re watching these companies burn cash: the money isn’t disappearing into thin air. It’s flowing somewhere. And the companies actually capturing that cash aren’t the ones getting the headlines.

The real question isn’t whether companies like OpenAI will eventually figure out how to make money (though that’s fascinating). The real question is: who’s getting rich off this $100 billion AI gold rush right now, today, while everyone else is playing venture capital roulette?

Let’s follow the trail.

The Shovel Sellers (Hardware Giants)

The answer to where AI money actually lands starts with understanding what every AI company needs but can’t build themselves: the hardware to run their models.

NVIDIA reported $44.1 billion in revenue for the first quarter of fiscal 2026, up 69% from a year ago 3. But here’s the detail that matters: $47.5 billion of NVIDIA’s revenue in fiscal 2024 came from data centers 7, while their traditional gaming business generated just $10.4 billion 8. The company has effectively transformed from a gaming chip maker into the infrastructure backbone of artificial intelligence.

The economics are straightforward. A single H100 GPU costs around $25,000, while multi-GPU setups can exceed $400,000 9. The newer H200 chips command even higher premiums, with cloud pricing ranging from $3.80 to $10.60 per GPU-hour compared to roughly $5 per hour for H100s 10. Companies aren’t just buying a few of these chips – they’re buying them by the thousands.

Meta alone had plans to have 600,000 H100-equivalent chips by the end of 2024, including 350,000 actual H100s plus H200s and newer Blackwell chips 11, 12. At $25,000 per chip, the 350,000 H100s alone come to approximately $9 billion in hardware for Meta’s AI infrastructure 11. When you scale that across Microsoft, Google, Amazon, and hundreds of smaller AI companies, you start to see where NVIDIA’s revenue growth comes from.
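The Meta estimate is simple multiplication, sketched here for reference. The second figure assumes every H100-equivalent is priced like an H100, which is an illustration only and not a reported number.

```python
H100_UNIT_PRICE_USD = 25_000       # approximate per-GPU price quoted above
META_H100_COUNT = 350_000          # actual H100s in Meta's plan
META_H100_EQUIVALENTS = 600_000    # total H100-equivalent compute target

h100_spend = H100_UNIT_PRICE_USD * META_H100_COUNT

# Assumption: each H100-equivalent costs the same as an H100 (illustration only).
equivalent_spend = H100_UNIT_PRICE_USD * META_H100_EQUIVALENTS

print(f"350,000 H100s: ${h100_spend / 1e9:.2f}B")
print(f"600,000 H100-equivalents at the same price: ${equivalent_spend / 1e9:.2f}B")
```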

The competitive landscape tells the same story—but with added nuance. In 2023, the global data-center AI-chip market totaled about $17.7 billion. NVIDIA held approximately 85% of the AI accelerator and GPU market, AMD commanded around 10%, and Intel accounted for roughly 5% 13.

| Company | Market Share (2023, AI Accelerators/GPUs) | Key Notes |
|---|---|---|
| NVIDIA | ~85% | Dominated with H100 GPUs; CUDA ecosystem drove adoption. |
| AMD | ~10% | MI200 series gained traction in cost-sensitive deployments. |
| Intel | ~5% | Gaudi 2 had limited adoption; focused on enterprise AI. |

Those figures are consistent with NVIDIA’s position in GPUs specifically, where it controlled about 90% of the data-center GPU market according to Bloomberg 13.
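To put the share figures in dollar terms, the approximate percentages can be applied to the $17.7 billion market total. This is a rough sketch: the shares are the rounded estimates quoted above, so the outputs are indicative only.

```python
market_total_busd = 17.7   # 2023 data-center AI-chip market, USD billions
shares = {"NVIDIA": 0.85, "AMD": 0.10, "Intel": 0.05}   # approximate shares from the text

# Implied 2023 revenue per vendor, in USD billions.
implied_revenue = {name: market_total_busd * share for name, share in shares.items()}

for name, revenue in implied_revenue.items():
    print(f"{name}: ~${revenue:.2f}B")
```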

The real advantage for NVIDIA isn’t just performance. It’s timing and ecosystem lock-in: its CUDA software stack, full-stack hardware integration, and rack-level systems together form significant entry barriers for competitors.

Every major cloud provider is trying to reduce their dependence on NVIDIA chips. Amazon has Trainium and Inferentia. Google has TPUs. Microsoft is investing in custom silicon. But here’s the problem: while they’re building alternatives for tomorrow, every AI company needs to train and run models today. And today means NVIDIA.

NVIDIA’s gross margins rose to 78% in their latest quarter, driven by selling more data center products. Those aren’t the margins of a commodity hardware company. Those are the margins of a company with pricing power in a supply-constrained market where demand continues to outstrip availability 14, 15.

The model is elegant in its simplicity: NVIDIA gets paid upfront, in cash, for physical products that AI companies need immediately. There’s no customer acquisition cost, no churn risk, no complex pricing negotiations about usage-based billing. Companies order chips, NVIDIA ships them, money changes hands. While AI startups burn through funding trying to figure out unit economics, NVIDIA has already been paid for the infrastructure that makes their experiments possible.

But NVIDIA’s dominance depends on a supply chain where TSMC, ASML, and other players are also collecting billions. The hardware layer isn’t just about who designs the best chip—it’s about who controls the manufacturing, memory, and packaging capacity that determines how many chips reach the market. This is what a real AI business model looks like: predictable demand, high margins, supply constraints that create pricing power, and customers who have no choice but to pay premium prices at every layer of the stack.

The Supply Chain Behind the Dominance

NVIDIA’s $44 billion quarterly revenue doesn’t exist in isolation. Behind every AI chip is a supply chain where multiple companies are capturing significant value—and creating bottlenecks that give them pricing power.

TSMC: Manufacturing the Winners

TSMC reported $33.1 billion in Q3 2025 revenue, up 40.8% year-over-year 16, with AI/HPC demand accounting for 60% of their revenue, up from 53% in Q3 2024 17. NVIDIA doesn’t manufacture its own chips—TSMC does. And TSMC also manufactures for AMD, Google’s TPUs, and Amazon’s custom silicon. They profit regardless of who wins the AI hardware race.
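Those TSMC figures imply how fast the AI/HPC segment itself is growing. The sketch below backs out the prior-year quarter from the reported growth rate; it assumes the 60% and 53% shares apply to the same revenue basis as the headline numbers.

```python
q3_2025_revenue = 33.1     # USD billions, as reported
yoy_growth = 0.408         # 40.8% year-over-year
ai_hpc_share_2025 = 0.60   # AI/HPC share of revenue, Q3 2025
ai_hpc_share_2024 = 0.53   # AI/HPC share of revenue, Q3 2024

# Back out the prior-year quarter from the reported growth rate.
q3_2024_revenue = q3_2025_revenue / (1 + yoy_growth)

ai_hpc_2025 = q3_2025_revenue * ai_hpc_share_2025
ai_hpc_2024 = q3_2024_revenue * ai_hpc_share_2024
ai_hpc_growth = ai_hpc_2025 / ai_hpc_2024 - 1

print(f"Implied AI/HPC revenue: ${ai_hpc_2024:.1f}B -> ${ai_hpc_2025:.1f}B "
      f"({ai_hpc_growth:.0%} growth)")
```

On these inputs the AI/HPC segment grows noticeably faster than the company as a whole, which is what a rising share of a growing total implies.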

The Equipment Layer: ASML’s Quiet Monopoly

Behind TSMC’s manufacturing capabilities sits another bottleneck: ASML’s extreme ultraviolet (EUV) lithography machines. ASML forecasts annual revenue between €44 billion and €60 billion by 2030, with gross margins between 56% and 60% 18. Each EUV machine costs around $200 million, with the most advanced systems approaching $400 million 19. ASML holds a monopoly on the technology needed to manufacture the most advanced chips—meaning TSMC, Samsung, and Intel all depend on the same Dutch equipment supplier.

The Packaging Constraint

Advanced packaging has emerged as another critical bottleneck. TSMC’s advanced packaging capacity for 2024 and 2025 was fully booked by just two clients, NVIDIA and AMD, with NVIDIA securing over 70% of TSMC’s CoWoS-L capacity for 2025 20. Companies aren’t just competing on chip design; they’re competing for access to the manufacturing capacity that determines how many chips can actually reach the market.

The pattern is clear: NVIDIA may dominate AI chip sales, but TSMC, SK Hynix, and ASML are capturing billions by controlling the manufacturing, memory, and packaging that make those chips possible. Each represents a chokepoint where supply constraints translate directly into pricing power and profit margins that rival NVIDIA’s own.

The Model Makers (Foundation Model Companies)

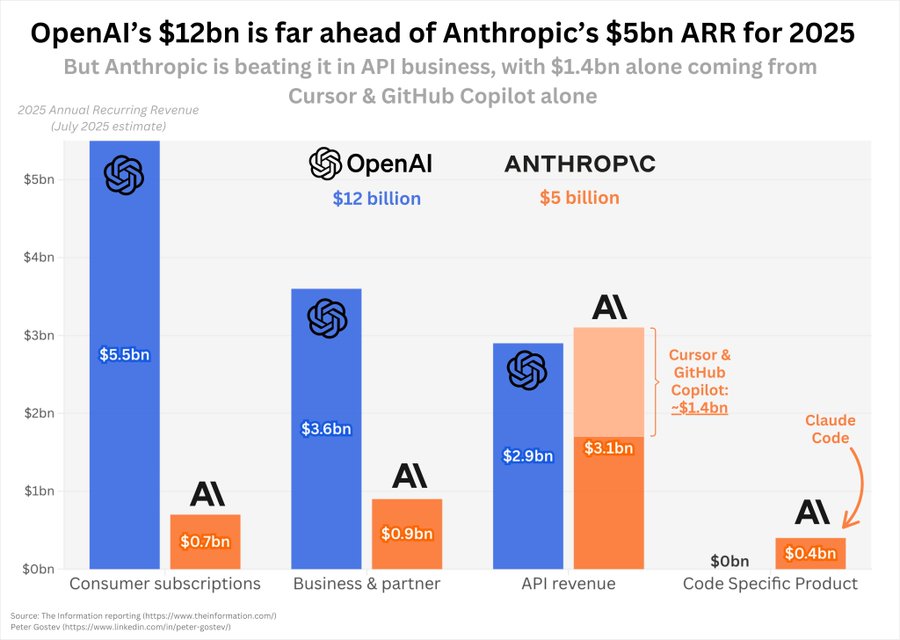

While hardware companies collect upfront payments, the foundation model business tells a different story—one where revenue is growing rapidly but profitability remains elusive, and the competitive landscape is shifting in unexpected ways. OpenAI’s revenue trajectory looks impressive on the surface: annualized revenue surged to about $13 billion by July 2025, tripling from around $4 billion in 2024 21.

Meanwhile, Anthropic hit $3 billion in annualized revenue by the end of May 2025, up from about $1 billion at the end of 2024 22.
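The two growth stories can be put on a comparable footing with a little arithmetic. This is a rough sketch: the helper names are illustrative, the OpenAI comparison mixes a 2024 revenue figure with a mid-2025 annualized run rate, and the Anthropic rate assumes smooth compounding over the five months from end of December to end of May.

```python
def growth_multiple(start, end):
    """How many times larger `end` is than `start`."""
    return end / start

def monthly_compound_rate(start, end, months):
    """Constant monthly growth rate that turns `start` into `end` over `months`."""
    return (end / start) ** (1 / months) - 1

openai_multiple = growth_multiple(4, 13)            # ~$4B (2024) to ~$13B annualized
anthropic_monthly = monthly_compound_rate(1, 3, 5)  # $1B to $3B annualized in 5 months

print(f"OpenAI: {openai_multiple:.2f}x")
print(f"Anthropic: {anthropic_monthly:.1%} implied monthly growth")
```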

Anthropic’s recent growth comes with concerning dependencies: the company’s revenue is heavily tied to just two large customers, and the startup expects to lose $3 billion in 2025 23. Despite hitting billions in annualized revenue, Anthropic’s models remain “utterly unprofitable” 24.

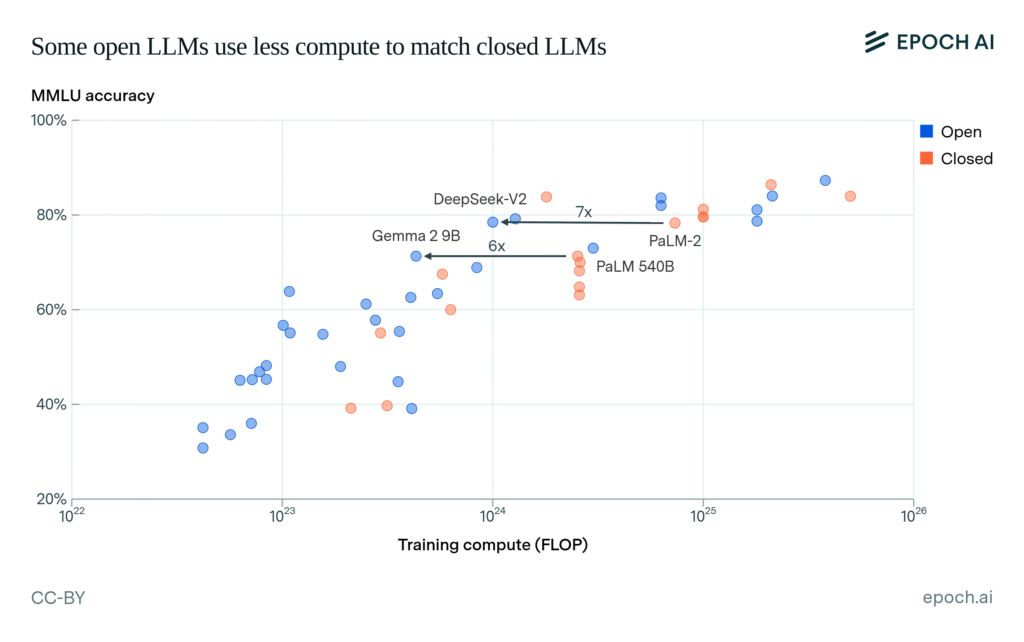

But the unit economics tell a more complex story. The cost of outputs from OpenAI’s GPT-4o today is roughly 100 times less per token than GPT-4 when it debuted in March 2023, and Google’s Gemini 1.5 Pro now costs about 76% less per output token 25. This represents genuine progress in efficiency, but it’s happening alongside a broader price war that’s compressing margins across the industry.
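Because one price drop is quoted as a multiple ("100 times less") and the other as a percentage ("76% less"), it helps to convert between the two. The helpers below are illustrative and not taken from any cited source.

```python
def reduction_from_factor(factor):
    """Fractional price reduction implied by 'factor times cheaper'."""
    return 1 - 1 / factor

def factor_from_reduction(reduction):
    """'Times cheaper' multiple implied by a fractional price reduction."""
    return 1 / (1 - reduction)

gpt4o_reduction = reduction_from_factor(100)   # GPT-4o vs. GPT-4 at launch
gemini_factor = factor_from_reduction(0.76)    # Gemini 1.5 Pro output tokens

print(f"100x cheaper = {gpt4o_reduction:.0%} lower price")
print(f"76% lower price = {gemini_factor:.1f}x cheaper")
```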

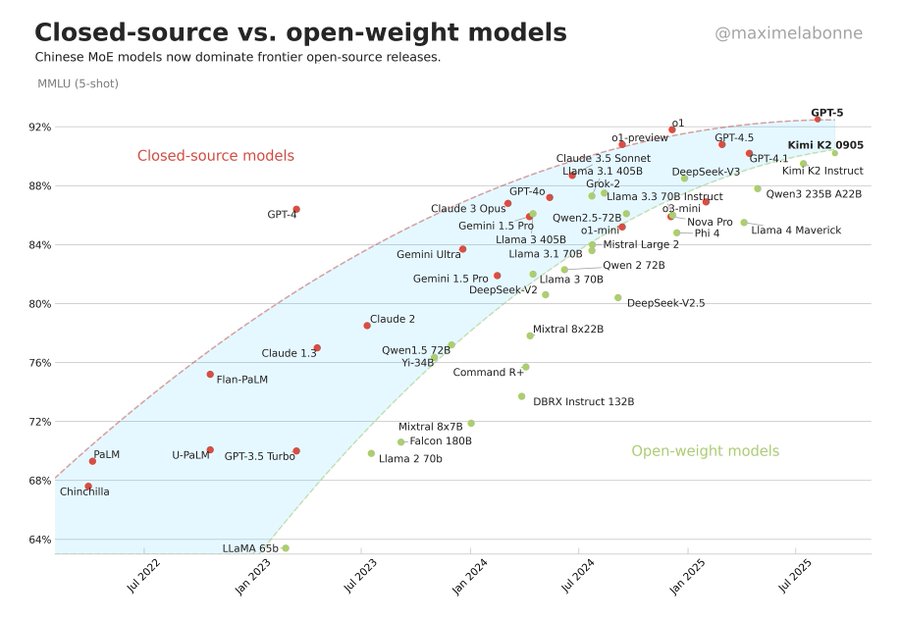

The Open Source Disruption

The competitive dynamics changed dramatically in early 2025 when Chinese companies began releasing models that challenged the assumption that cutting-edge AI required massive Western budgets. DeepSeek’s R1 and V3 models, released in January 2025, disrupted the industry by achieving performance comparable to leading Western models at dramatically lower costs, proving that effective AI development doesn’t require massive budgets 26, 27.

Alibaba’s Qwen team acknowledged the scaling insights disclosed with DeepSeek V3’s release, showing how the industry is rapidly sharing and building on technical breakthroughs. These open-source alternatives don’t just compete on performance – they fundamentally challenge the capital-intensive model that Western AI companies have built their valuations around 28.

Meta’s approach with Llama represents this strategy taken to its logical conclusion. By open-sourcing their models, they’re essentially giving away billions in training costs to prevent others from building moats. LLaMA-13B outperforms GPT-3 (175B) on most benchmarks, and LLaMA-65B is competitive with the best models, yet Meta distributes these models for free. This isn’t altruism – it’s a strategic move to commoditize the foundation model layer and force competitors to compete on applications rather than model performance 29, 30, 31.

The Plateau Problem

The excitement around new model releases has notably cooled. While companies continue pushing out incremental improvements, the transformative leaps that characterized 2022-2024 have become less frequent. Claude’s monthly active users actually decreased by 15% from November 2024 to January 2025, dropping from 18.8 million to 16 million users, suggesting that even well-regarded models are facing user retention challenges as the novelty wears off 32.

The competitive dynamics make profitability worse, not better. Claude Opus 4 costs roughly seven times more per million tokens than GPT-5 for certain tasks, creating immediate pressure on Anthropic’s enterprise pricing strategy. This isn’t just about compute efficiency – it’s about a pricing war that’s compressing margins across the entire industry while open-source alternatives offer comparable performance at zero licensing cost 33.

The Unit Economics Problem

The fundamental challenge becomes clearer when you examine the cost structure. Every time someone uses ChatGPT, OpenAI pays for compute on Microsoft’s Azure infrastructure. Every conversation with Claude costs Anthropic money in AWS compute. Unlike traditional software where marginal costs approach zero at scale, AI models have substantial marginal costs for every interaction 34.
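The OpenAI figures cited at the start of this piece give a rough sense of how far costs outrun revenue. The sketch below assumes the reported loss is simply total costs minus revenue, ignoring any other income, so it is an approximation and not a reconstruction of OpenAI's actual accounts.

```python
revenue_2024_busd = 3.7   # OpenAI 2024 revenue, USD billions
loss_2024_busd = 5.0      # OpenAI 2024 loss, USD billions

# Assumption: loss = total costs - revenue.
implied_costs_busd = revenue_2024_busd + loss_2024_busd
cost_per_revenue_dollar = implied_costs_busd / revenue_2024_busd

print(f"Implied total costs: ${implied_costs_busd:.1f}B")
print(f"Spend per $1 of revenue: ${cost_per_revenue_dollar:.2f}")
```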

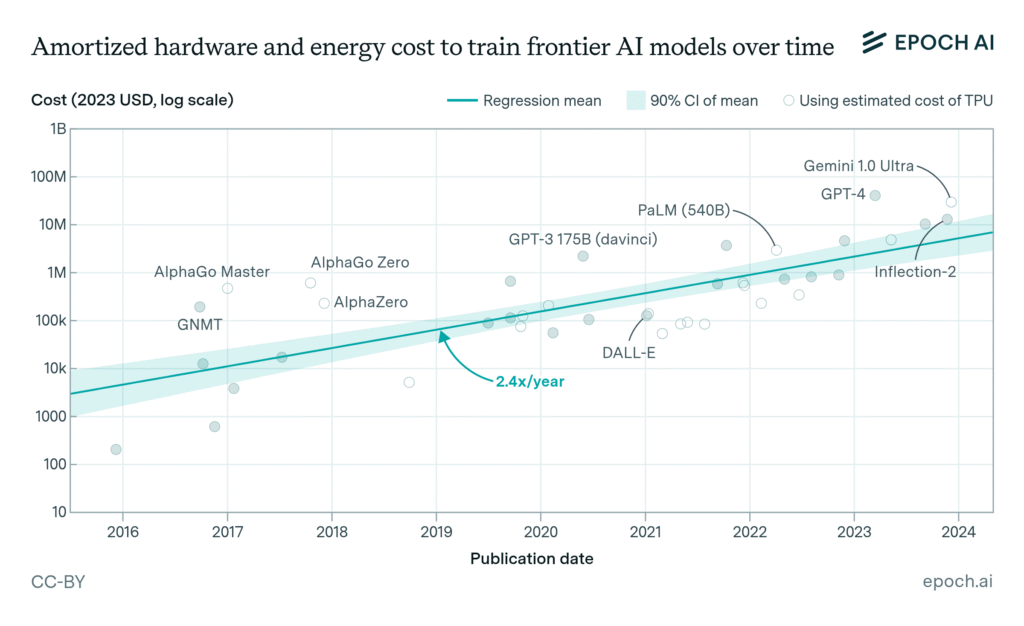

Training costs alone reveal the scale of capital required. Training Meta’s Llama 2 70B model is estimated to have cost at least $3.9 million, with costs for larger models rising significantly 35. And that’s just the initial training – ongoing inference costs for serving millions of users dwarf training expenses.

Companies aren’t just running single models either. They’re running multiple model versions, constant retraining cycles, safety testing, and experimental features – all of which add to the cost base without directly generating revenue. Anthropic CEO Dario Amodei recently stated that each individual AI model can already be profitable, but this overlooks the broader business reality of maintaining competitive AI services 36.

| Year | Model Name | Model Creators/Contributors | Training Cost (USD, Inflation-Adjusted) |
|---|---|---|---|
| 2017 | Transformer | Google | $930 |
| 2018 | BERT-Large | Google | $3,288 |
| 2019 | RoBERTa Large | Meta | $160,018 |
| 2020 | GPT-3 175B (davinci) | OpenAI | $4,324,883 |
| 2021 | Megatron-Turing NLG 530B | Microsoft/NVIDIA | $6,405,653 |
| 2022 | LaMDA | Google | $1,319,586 |
| 2022 | PaLM (540B) | Google | $12,389,056 |
| 2023 | GPT-4 | OpenAI | $78,352,034 |
| 2023 | Llama 2 70B | Meta | $3,931,897 |
| 2023 | Gemini Ultra | Google | $191,400,000 |

Sources: Training Costs of AI Models
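The table's endpoints imply a steep compound growth rate in frontier training costs. The sketch below compares only the cheapest early entry (the 2017 Transformer) with the most expensive recent one (Gemini Ultra), so it illustrates the trend at the frontier and is not a fit across all rows.

```python
cost_2017_usd = 930            # Transformer (2017)
cost_2023_usd = 191_400_000    # Gemini Ultra (2023)
years = 2023 - 2017

total_multiple = cost_2023_usd / cost_2017_usd
# Constant yearly factor that compounds to the total multiple over six years.
annual_factor = total_multiple ** (1 / years)

print(f"Total increase: {total_multiple:,.0f}x over {years} years")
print(f"Implied annual factor: {annual_factor:.1f}x per year")
```

On these two data points, the cost of the most expensive model grew by somewhere between seven and eight times per year.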

What’s emerging is a market where revenue growth doesn’t translate to a path to profitability, where open-source alternatives are becoming genuinely competitive, and where the next breakthrough in model capabilities feels less inevitable than it did two years ago. The foundation model business isn’t failing, but the economics are proving far more challenging than the early valuations suggested 37.

The Application Layer – Where AI Meets Enterprise

The enterprise software layer presents a different economic picture: companies are finding ways to charge premiums for AI features, but the question of actual value delivery is more complex than the headlines suggest.

Microsoft’s Copilot has achieved remarkable penetration, with 79% of surveyed companies using it and more than 60% of Fortune 500 companies adopting it by early 2024 38, 39. At $30 per month per enterprise seat and $20 for individuals 40, the pricing represents a 50-75% premium over base Office 365 subscriptions.

The ROI numbers vary significantly. Microsoft-commissioned studies show up to 353% ROI for small and medium businesses using Copilot 41, while Microsoft’s own analysis shows a 9.4% increase in revenue per seller and a 20% increase in close rates among heavy Copilot users 42. However, these numbers come from Microsoft-sponsored research, which introduces an obvious sponsorship bias in the results.

Sources: The State of AI: Global survey | McKinsey

Independent research presents a more nuanced view. Deloitte’s enterprise AI study found that almost all organizations report measurable ROI with generative AI in their most advanced initiatives, with 20% reporting ROI exceeding 30% 43. But the key phrase here is “most advanced initiatives” – these represent the best-case scenarios, not typical deployments.

The Feature vs. Product Dilemma

The challenge for established software companies lies in pricing strategy. Competition exists between vendors wanting to charge per user for AI features and IT departments that believe generative AI capabilities should be included in existing pricing 44. This tension is playing out across the industry.

Salesforce exemplifies this approach. The company recently raised prices by an average of 6% for Enterprise and Unlimited SKUs of Sales Cloud, Service Cloud, and Field Service, justifying the increase with increased AI integration 45. The strategy is straightforward: bundle AI features with existing products, then use those features to justify price increases.

The table below summarizes the main enterprise software AI pricing models (stand-alone, bundled, and usage-based), based on recent 2025 analysis and industry data:

| Model Type | Description | Typical Use Cases | Advantages | Challenges | Typical Pricing Terms |
|---|---|---|---|---|---|
| Stand-alone | AI features charged separately from core software, often per user or fixed fee | AI copilots, vertical AI tools | Transparent AI value, flexible adoption | Adds to total cost, potential siloing | $20–$30 per user/month or fixed fees |
| Bundled | AI features included with broader software subscriptions/licenses | Productivity suites, CRM platforms | Seamless user experience, easier procurement | Less pricing transparency, limited flexibility | Included in base subscription fee (e.g., Office 365 Copilot bundle) |
| Usage-based | Pricing based on consumption metrics like API calls, GPU hours | LLM APIs, real-time inference, generative AI | Pay for actual value, highly scalable | Unpredictable billing, need for governance | $3–$10 per 1K API calls or GPU hour |

Sources:

- AI software pricing models overview, detailed guide to enterprise AI pricing strategies (SparkoutTech 2024)
- Industry guide on subscription, usage, outcome, and hybrid AI pricing models (AnyReach.ai 2025)
- Real-world usage-based pricing complexities and tiers (Phyniks 2025)
- AI cost trends and value-based pricing considerations (Zylo 2025)

Recent market analysis shows most vendors are moving toward hybrid combinations, blending subscription or bundled elements with some usage-based or outcome-linked fees. “Outcome-based” and “hybrid” models—where enterprises pay per success metric or mix flat and variable fees—are emerging for advanced or large-scale deployments, especially when tying ROI directly to business results.
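The trade-off between per-seat and usage-based pricing can be sketched as a break-even calculation. The prices come from the table above; the function names and the hybrid example's inputs (100 seats, a $20 flat fee, 50,000 calls) are hypothetical, chosen only to show the shape of the calculation.

```python
def break_even_calls_per_seat(seat_price, price_per_1k_calls):
    """Monthly API calls at which usage-based cost equals one flat seat fee."""
    return seat_price / price_per_1k_calls * 1000

def hybrid_monthly_cost(seats, flat_fee_per_seat, api_calls, price_per_1k_calls):
    """Hypothetical hybrid bill: flat per-seat fee plus a metered usage charge."""
    return seats * flat_fee_per_seat + api_calls / 1000 * price_per_1k_calls

# A $30 seat versus the low and high ends of the usage-based range.
calls_at_low_price = break_even_calls_per_seat(30, 3)
calls_at_high_price = break_even_calls_per_seat(30, 10)

# Hypothetical hybrid deal: 100 seats at $20 plus 50,000 calls at $3 per 1K.
hybrid_bill = hybrid_monthly_cost(100, 20, 50_000, 3)

print(f"Break-even at $3 per 1K calls: {calls_at_low_price:,.0f} calls per seat per month")
print(f"Break-even at $10 per 1K calls: {calls_at_high_price:,.0f} calls per seat per month")
print(f"Hypothetical hybrid bill: ${hybrid_bill:,.0f} per month")
```

Under these assumptions, a user making fewer calls than the break-even volume costs less on usage-based pricing, while heavier users favor the flat seat fee.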

Vertical Specialization Shows Promise

Where enterprise AI applications demonstrate clearer value is in specialized vertical use cases. Companies building AI-powered legal research tools, code generation platforms, and industry-specific workflows can charge substantial premiums because they deliver measurable productivity gains in high-value tasks.

Current adoption patterns show 55% of US organizations using AI, but only 10% have extensive AI use, while 26% are in early pilot stages 46. This suggests most companies are still figuring out how to extract value from AI tools rather than fully integrating them into workflows.

The application layer represents the closest thing to sustainable AI business models we’ve seen so far. Companies can charge premiums, customers are seeing measurable benefits in specific use cases, and the value proposition doesn’t require massive ongoing infrastructure investments. But the challenge remains: determining which AI features justify premium pricing and which should be table stakes in modern software.

The Real Winners

After following the money through $100 billion in venture funding, hardware margins, foundation model burn rates, and hidden infrastructure plays, the picture becomes clear: the AI gold rush is generating massive profits, just not where most people are looking.

NVIDIA remains the undisputed winner, collecting tolls on every AI workload while maintaining gross margins above 70% on hardware that companies desperately need today. The consulting giants – Accenture, McKinsey, Deloitte – are quietly generating hundreds of millions helping enterprises implement AI systems they can’t build themselves. Infrastructure companies like Databricks and Pinecone are capturing value by providing the specialized databases and platforms that make AI applications possible. Meanwhile, the companies getting the headlines – OpenAI, Anthropic, and their competitors – are still searching for sustainable unit economics.

The enterprise software layer shows the most promise for sustainable AI monetization. Companies like Microsoft are successfully charging premiums for AI features, while specialized vertical applications demonstrate clear ROI in high-value use cases. But even here, the jury’s still out on how much of AI becomes a premium feature versus table stakes.

The lesson isn’t that AI is overhyped – the technology is genuinely transformative. The lesson is that in any gold rush, the companies selling essential services usually get rich while the miners take the risk. Right now, NVIDIA is selling the shovels, consulting firms are selling the maps, and infrastructure companies are selling the transportation. The foundation model companies are still digging, burning through billions while hoping to strike gold.

The AI boom is real, the money is flowing, and some companies are getting very rich. They’re just not necessarily the ones you read about every day.

1. NVIDIA market capitalization September 2025: https://companiesmarketcap.com/nvidia/marketcap/
2. OpenAI 2024 financials and valuation estimate: OpenAI sees $5 billion loss this year on $3.7 billion in revenue
3. NVIDIA official press release: https://nvidianews.nvidia.com/news/nvidia-announces-financial-results-for-first-quarter-fiscal-2026
4. https://www.mintz.com/insights-center/viewpoints/2166/2025-03-10-state-funding-market-ai-companies-2024-2025-outlook
5. AI Companies Receive 42% of US Venture Capital Investment
6. https://epoch.ai/data-insights/ai-companies-revenue
7. MLQ.ai Q4 2024 Earnings Report: https://mlq.ai/stocks/nvda/q4-2024-earnings
8. NVIDIA Fiscal 2024 Financial Report: NVIDIA Corporation – NVIDIA Announces Financial Results for Fourth Quarter and Fiscal 2024
9. Jarvislabs H100 2025 Price Guide: https://docs.jarvislabs.ai/blog/h100-price
10. Jarvislabs H200 2025 Price Breakdown: https://docs.jarvislabs.ai/blog/h200-price
11. SiliconANGLE report via Techzine about Meta plans to buy GPUs: https://www.techzine.eu/news/devices/115505/meta-plans-to-buy-350000-nvidia-gpus-to-compete-in-ai-arms-race/
12. Meta aiming for 600,000 H100 equivalents including own chips: https://www.socialmediatoday.com/news/meta-develops-own-chips-for-expanded-ai-development/742254/
13. Bloomberg on NVIDIA’s AI chip market dominance: https://www.bloomberg.com/news/articles/2025-05-20/nvidia-s-hopper-blackwell-ai-chips-are-market-leaders-can-intel-amd-compete
14. PatentPC AI Chip Market Explosion NVIDIA dominance 2025: https://patentpc.com/blog/the-ai-chip-market-explosion-key-stats-on-nvidia-amd-and-intels-ai-dominance
15. Seeking Alpha Nvidia AI Dominance and Margins 2025: https://seekingalpha.com/article/4804906-nvidias-ai-dominance-unstoppable-growth-engine-vs-overvalued-hype
16. TSMC Q3 2025 Revenue Analysis: https://beth-kindig.medium.com/tsm-stock-and-the-ai-bubble-40-ai-accelerator-growth-fuels-the-valuation-debate-5be17c56449f
17. AInvest TSMC AI Revenue Report: https://www.ainvest.com/news/tsmc-q3-2025-profit-surge-ai-driven-semiconductor-demand-long-term-valuation-implications-2510/
18. Technology Magazine ASML Revenue Forecast: https://technologymagazine.com/articles/asml-projects-60bn-revenue-on-ai-chip-demand-explained
19. PatentPC Chip Equipment Analysis: https://patentpc.com/blog/top-chip-making-equipment-companies-asml-applied-materials-and-lam-research-market-data
20. TechPowerUp TSMC Packaging Capacity Report: TSMC Reserves 70% of 2025 CoWoS-L Capacity for NVIDIA | TechPowerUp
21. OpenAI forecasts $13bn in revenue as AI boom continues: https://www.proactiveinvestors.co.uk/companies/news/1078158/openai-forecasts-13bn-in-revenue-as-ai-boom-continues
22. Anthropic hits $3 billion in annualized revenue on business demand for AI: https://www.cnbc.com/2025/05/30/anthropic-hits-3-billion-in-annualized-revenue-on-business-demand-for-ai.html
23. Anthropic revenue tied to two customers as AI pricing war threatens margins: https://venturebeat.com/ai/anthropic-revenue-tied-to-two-customers-as-ai-pricing-war-threatens-margins/
24. Analysis of Anthropic and OpenAI profitability challenges: https://www.wheresyoured.at/anthropic-and-openai-have-begun-the-subprime-ai-crisis/
25. A Look at OpenAI’s Economics and AI Cost Efficiency: https://raphaelledornano.medium.com/a-look-at-openais-economics-4f5ba6f75d34
26. DeepSeek AI Statistics and Facts: https://seo.ai/blog/deepseek-ai-statistics-and-facts
27. DeepSeek Debates on cost and performance: https://semianalysis.com/2025/01/31/deepseek-debates/
28. Alibaba Qwen3-Max release details: Qwen
29. Meta’s strategy for open-sourcing LLaMa: https://blog.hippoai.org/metas-strategy-for-open-sourcing-llama-a-detailed-analysis-hippogram-27/
30. LLaMA outperforming GPT-3 benchmarks: https://arxiv.org/abs/2302.13971
31. Open Source AI path forward at Meta: https://about.fb.com/news/2024/07/open-source-ai-is-the-path-forward/
32. Claude AI monthly active users Nov 2024 – Jan 2025: https://analyzify.com/statsup/anthropic
33. GPT-5 vs Claude Opus 4 pricing comparison: https://apidog.com/blog/gpt-5-vs-claude-opus/
34. The Economics of Infinite Intelligence – Hacking Economics: https://www.hackingeconomics.com/p/the-economics-of-infinite-intelligence
35. Visualizing Training Costs of AI Models: https://www.visualcapitalist.com/training-costs-of-ai-models-over-time/
36. Anthropic CEO Dario Amodei on AI model profitability: https://officechai.com/ai/each-individual-ai-model-can-already-be-profitable-anthropic-ceo-dario-amodei/
37. The Economics of Foundation Models – InvestingInAI: https://investinginai.substack.com/p/the-economics-of-foundation-models
38. Microsoft Ignite 2024: Copilot adoption: https://news.microsoft.com/en-hk/2024/11/20/ignite-2024-why-nearly-70-of-the-fortune-500-now-use-microsoft-365-copilot/
39. Winsome Marketing: https://winsomemarketing.com/ai-in-marketing/microsofts-copilot-ai-is-rewriting-enterprise-productivity
40. Team GPT Microsoft Copilot Pricing: https://team-gpt.com/blog/copilot-pricing
41. Microsoft 365 Blog Forrester ROI Study: https://www.microsoft.com/en-us/microsoft-365/blog/2024/10/17/microsoft-365-copilot-drove-up-to-353-roi-for-small-and-medium-businesses-new-study/
42. Microsoft Tech Community Copilot Analytics: https://techcommunity.microsoft.com/blog/microsoft365copilotblog/microsoft-365-copilot-year-in-review–2024/4359781
43. Deloitte State of Generative AI in the Enterprise 2024: https://www.deloitte.com/us/en/what-we-do/capabilities/applied-artificial-intelligence/content/state-of-generative-ai-in-enterprise.html
44. Deloitte report on AI software pricing tensions: https://www.deloitte.com/us/en/insights/industry/technology/technology-media-and-telecom-predictions/2024/generative-ai-enterprise-software-revenue-prediction.html
45. Salesforce price increase for AI features: https://www.theregister.com/2025/06/17/salesforce_ai_prices/
46. Surveil report on US AI adoption stages: https://surveil.co/accelerating-ai-adoption-microsoft-copilot-trends-insights/
